Serverless Architecture: Everything You Need to Know About Serverless

In the beginning (well, the 1960s, anyway), computers filled rack after rack in vast, remote, air-conditioned data centers, and users would never see them or interact with them directly. Instead, developers submitted their jobs to the machine remotely and waited for the results. Many hundreds or thousands of users would all share the same computing infrastructure, and each would simply receive a bill for the amount of processor time or resources she used[1].

In those days, most companies preferred not to buy and maintain their own computing hardware. Instead, they shared the computing power of remote machines that were owned and run by a third party.

What is happening in today’s world?

Computers have become far more powerful since then, but shared, pay-per-use access to computing resources is a very old idea[2]. Today, we call it the cloud. With the cloud, you rent computing time (cloud computing) instead of buying a computer.

Cloud computing is on-demand access, via the internet, to computing resources—applications, servers (physical servers and virtual servers), data storage, development tools, networking capabilities, and more—hosted at a remote data center managed by a cloud services provider (or CSP)[3].

By renting computing, the cloud basically makes your problem someone else’s problem. That is, instead of sinking large amounts of capital into physical machinery, which is hard to scale, breaks down mechanically, and rapidly becomes obsolete, you simply buy time on someone else’s computer, and let them take care of the scaling, maintenance, and upgrading[4].

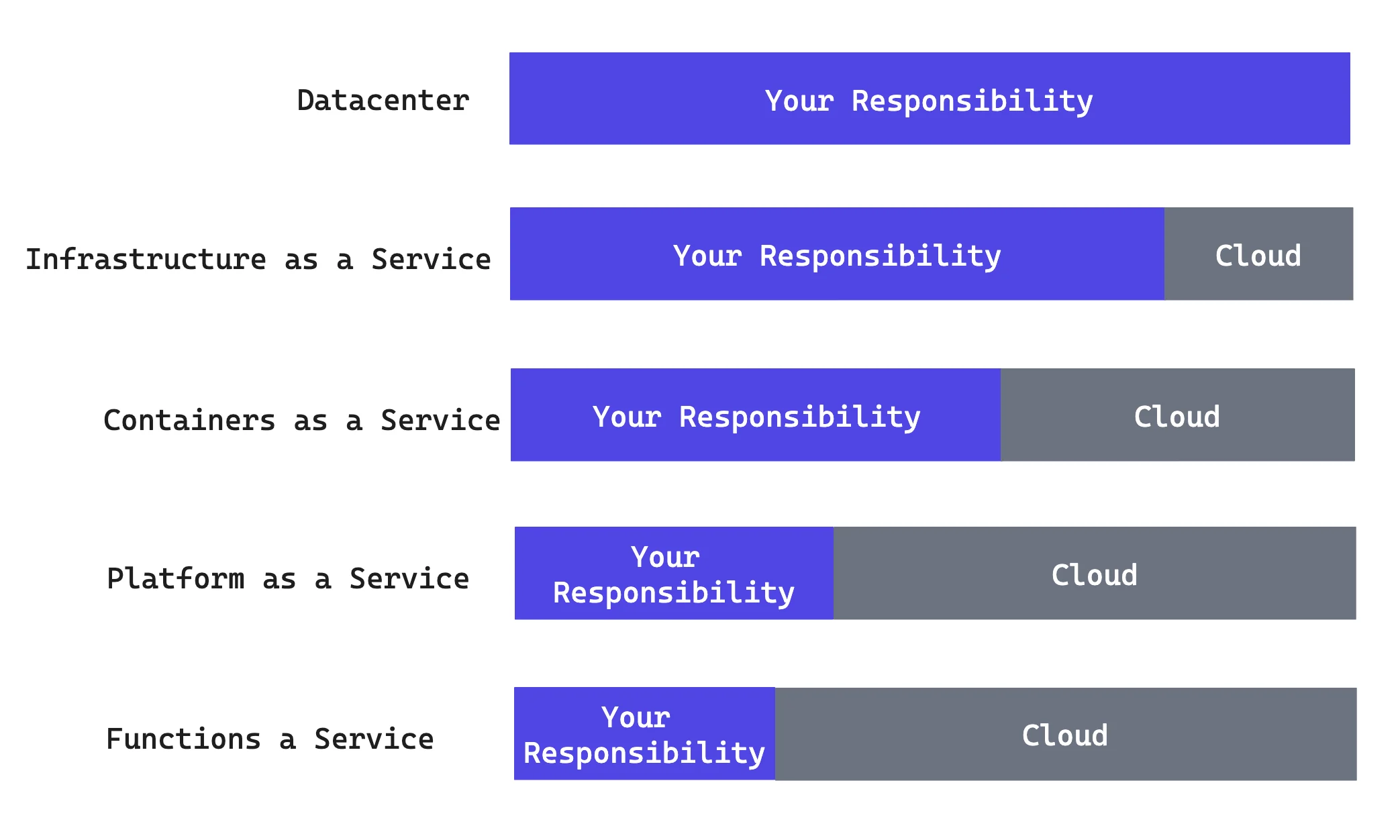

Suppose you want to store your data on Google Cloud instead of buying a server. You may wonder how much responsibility you will have and, conversely, how much responsibility the cloud provider will have. In truth, that depends on your needs and budget, because there are different cloud computing service models.

Cloud Computing Service Models

The names of all these models end in ‘as a service.’ The phrase marks the difference between cloud computing and traditional IT.

In traditional IT, an organization consumes IT assets - hardware, system software, development tools, applications - by purchasing them, installing them, managing them, and maintaining them in its own on-premises data center[5].

In cloud computing, the cloud service provider owns, manages, and maintains the assets; the customer consumes them via an Internet connection and pays for them on a subscription or pay-as-you-go basis.

How the responsibilities are divided depends on the model you choose.

If you buy your own server to support your business applications and provide services, you take on all responsibility for building and managing the infrastructure and everything around it. That work requires dedicated DevOps resources to oversee and manage.

1. Infrastructure as a Service (IaaS)

IaaS is on-demand access to cloud-hosted computing infrastructure - servers, storage capacity, and networking resources - that customers can provision, configure and use in much the same way as they use on-premises hardware[6].

You can ask the cloud provider for a machine with 4 CPU cores and 16 GB of RAM. The provider is responsible for keeping the server, its disks, and its network up and running. Configuring the networking and storage settings is your responsibility, and so is securing the machine. Note that the machine keeps generating costs even while your application is idle.

Examples: Amazon Web Services, Google Cloud, IBM Cloud, Microsoft Azure

2. Containers as a Service (CaaS)

You may not know what a container is. In simple terms, a container is a package of software that includes all of its dependencies (code, runtime, configuration, and system libraries) so that it can run on any host system.

Containers as a Service (CaaS), in turn, is a cloud-based service that allows software developers and IT departments to upload, organize, run, scale, and manage containers by using container-based virtualization[7].

CaaS is essentially automated hosting and deployment of containerized software packages. Without CaaS, software development teams need to deploy, manage, and monitor the underlying infrastructure that containers run on.

Imagine you have an application that you want to run for your customers. In this model, you containerize your application and hand it to the cloud provider, which deploys and scales it on high-availability cloud infrastructure. The provider decides how many instances run depending on the number of requests. You don’t deal directly with the machine itself.

CaaS enables your development teams to think at the higher-order container level instead of mucking around with lower-level infrastructure management. This gives your team a clearer view of the end product and allows for more agile development and higher value delivered to the customer. Because they can focus on the product rather than on infrastructure, their productivity increases.

Examples: Google Cloud Run

3. Platform as a Service (PaaS)

PaaS provides a cloud-based platform for developing, running, and managing applications. It includes the underlying infrastructure, including compute, network, and storage resources, as well as development tools, database management systems, and middleware[8].

In other words, the cloud services provider hosts, manages, and maintains all the hardware and software included in the platform - servers (for development, testing, and deployment), operating system (OS) software, storage, networking, databases, middleware, runtimes, frameworks, development tools - as well as related services for security, operating system, and software upgrades, backups and more[9].

If you need immediate access to all the resources needed to support the entire application lifecycle, including designing, developing, testing, deploying, and hosting applications, PaaS could be a good choice for you.

However, Platform as a Service is concerned with explicit ‘language stack’ deployments like Go, Python, Ruby on Rails, or Node.js, whereas Containers as a Service can deploy multiple stacks per container. That is the limitation of PaaS.

Examples: Google App Engine, Heroku

4. Functions as a Service (FaaS)

While PaaS provides a complete platform for developers to build, test, and deploy their applications, FaaS only provides the ability to run code in the cloud.

FaaS is a cloud computing model that enables you, as a cloud customer, to develop your applications and deploy them as individual functions. You are charged only when a function executes.

You don't have to manage complex infrastructure like physical hardware, virtual machine operating system, and web server software management, which are needed for hosting software applications. These are all handled automatically by the cloud service provider.

Let’s try to understand how it works!

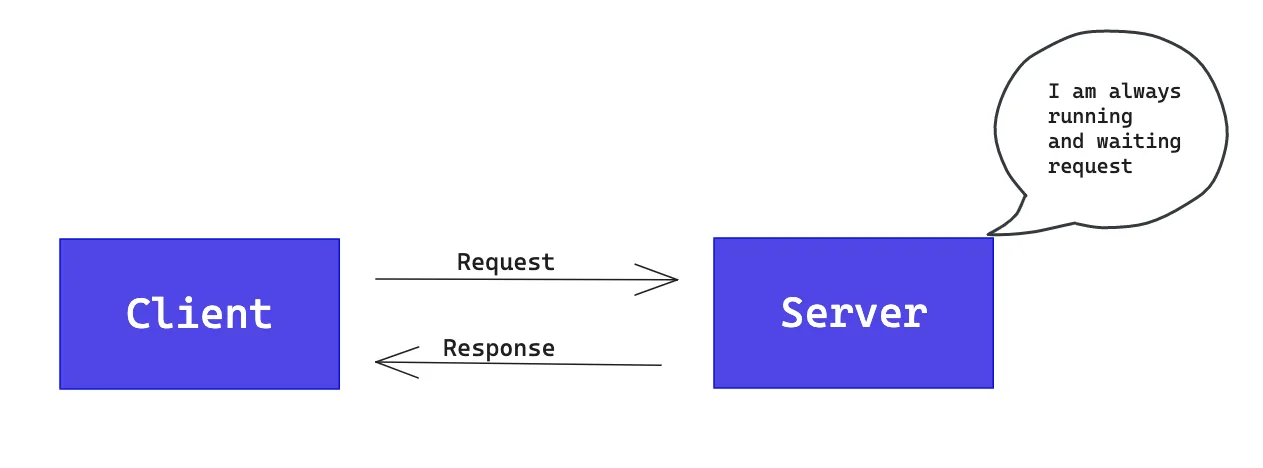

In the client-server model, the server is always running and listening for HTTP requests. It stays up in the background whether or not it is being used.

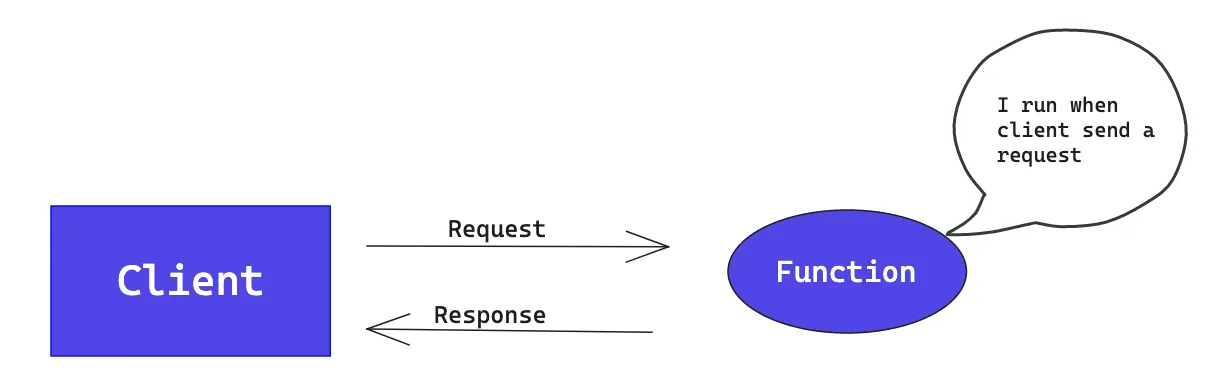

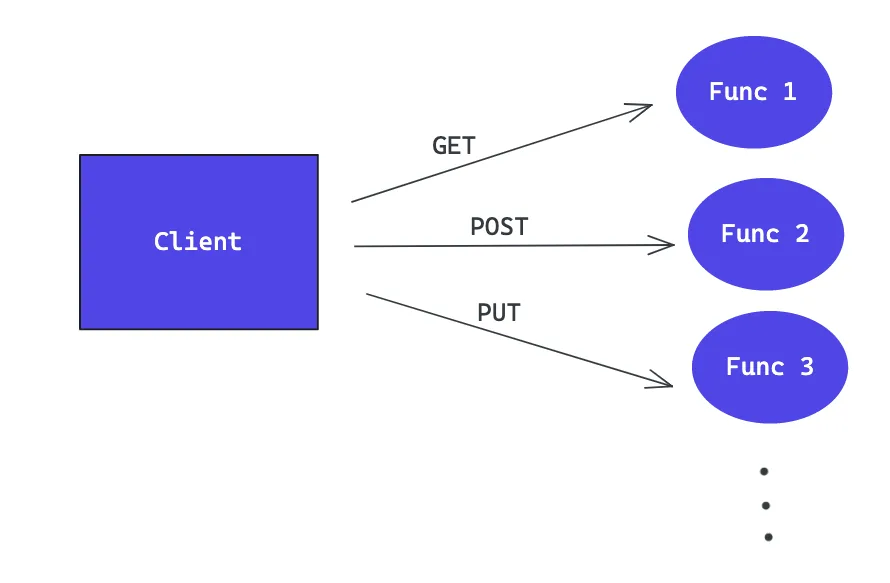

In the functions as a service model, on the other hand, there is no constantly running server. When a client makes a request, the cloud provider creates an instance of your function to handle it.

Developers can deploy these pieces of code, known as functions, on demand without thinking about maintaining application servers. Put simply, a function is just a small block of code that implements one piece of functionality.

Suppose a client wants to save data to the database, so she sends a POST request. The function validates the POST request, adds the data to the database, and sends a response back.

The important point is that no instance of this function is running before the client sends a request. As said before, the cloud provider creates it when the request arrives, so it never runs idle in the background.

Here are the steps:

The client sends a request.

The cloud service provider creates the function.

The function handles the request and saves the data to the database.

The function sends the response back to the client.

If there are no more HTTP requests, the function disappears.
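To make these steps a little more tangible, here is a minimal sketch of what such a function might look like. It is not tied to any provider: the `event` shape (`method`, `body`), the required `name` field, and the in-memory `FAKE_DB` list standing in for a real database are all assumptions made for illustration.

```python
import json

# Hypothetical in-memory stand-in for a real database table.
FAKE_DB = []


def handle_post(event):
    """Validate a POST request, save the record, and build a response.

    `event` is an assumed shape: {"method": "POST", "body": "<JSON string>"}.
    """
    if event.get("method") != "POST":
        return {"status": 405, "body": json.dumps({"error": "method not allowed"})}

    # Validate the request body.
    try:
        payload = json.loads(event.get("body") or "")
    except (json.JSONDecodeError, TypeError):
        return {"status": 400, "body": json.dumps({"error": "invalid JSON"})}

    if not isinstance(payload, dict) or "name" not in payload:
        return {"status": 400, "body": json.dumps({"error": "'name' is required"})}

    # Save the data to the "database".
    FAKE_DB.append(payload)

    # Send the response back to the client.
    return {"status": 201, "body": json.dumps({"saved": payload})}
```

Notice that nothing in the function starts a server or listens on a port. In the FaaS model, that part would be the provider's job; your code only describes what to do with one request.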

Because the function is created only when you need it, you pay only for the compute time. In other words, you pay only for the actual usage of resources. However, the price per CPU-second is higher than in the other service models. You pay for time and resources only while the function is running, and the cloud provider keeps the unit price higher to cover the work it takes on, such as deploying and upgrading the entire application and preparing its instances.
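A back-of-the-envelope calculation can help you see this trade-off. The sketch below compares an always-on server with pay-per-use billing. Every number in it (the hourly server price, the per-second function price, the request duration, and the 730-hour month) is a made-up placeholder, not any provider's real rate.

```python
HOURS_PER_MONTH = 730  # rough monthly average, an assumption for this sketch


def always_on_cost(price_per_hour, hours=HOURS_PER_MONTH):
    """Monthly cost of a server that runs whether or not it is used."""
    return price_per_hour * hours


def pay_per_use_cost(requests, seconds_per_request, price_per_second):
    """Monthly cost when you are billed only while a function executes."""
    return requests * seconds_per_request * price_per_second


def break_even_requests(price_per_hour, seconds_per_request, price_per_second,
                        hours=HOURS_PER_MONTH):
    """Requests per month at which both models cost the same."""
    return always_on_cost(price_per_hour, hours) / (
        seconds_per_request * price_per_second
    )


# Hypothetical prices: the function costs more per second than the server.
SERVER_PRICE_PER_HOUR = 0.05         # roughly 0.000014 per second
FUNCTION_PRICE_PER_SECOND = 0.00005  # several times the server's per-second price
SECONDS_PER_REQUEST = 0.2
```

With these placeholder numbers, the always-on server costs about 36.50 per month no matter how little it is used, 100,000 requests cost about 1.00 on the pay-per-use model, and the two models meet at roughly 3.65 million requests per month. Below that point the higher unit price still works out cheaper; above it, the always-on server wins.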

For efficiency, the provider can increase or decrease the number of instances depending on the number of requests. This is the provider's responsibility, and you do not need to worry about instances. When many requests arrive at once, it can spin up more function instances to handle them.
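As a toy model of that scaling decision, you can think of it as a simple division. Real providers use their own, more sophisticated policies. The function name and the `requests_per_instance` parameter below are assumptions for illustration only.

```python
import math


def instances_needed(concurrent_requests, requests_per_instance=1):
    """Toy scaling rule: enough instances to cover the current load."""
    if concurrent_requests <= 0:
        return 0  # no requests, so no instance needs to run
    return math.ceil(concurrent_requests / requests_per_instance)
```

For example, `instances_needed(250, requests_per_instance=100)` gives 3, and `instances_needed(0)` gives 0, which corresponds to the function disappearing when there is nothing to do.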

To sum up, FaaS allows developers to execute functions in response to specific events. Everything besides the code (physical hardware, virtual machine operating system, and web server software management) is provisioned automatically by the cloud service provider in real time when execution starts and released when execution stops. Billing likewise starts when execution starts and stops when execution stops.

Remember, you only pay for your actual usage of resources!

So far, so good! But what is the relationship between the function-as-a-service model and serverless?

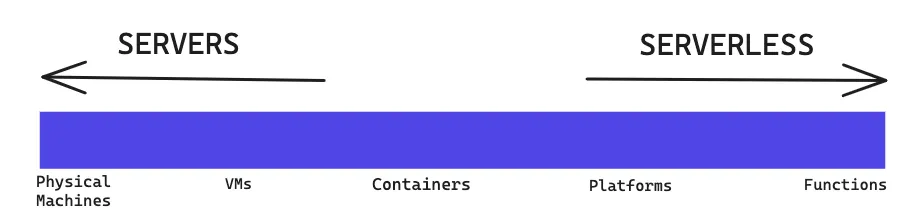

Before starting, you need to know that ‘serverless’ and ‘function as a service’ are not the same thing. FaaS is often confused with serverless computing. However, FaaS is a subset of serverless.

Serverless is focused on any service category, be it computing, storage, database, messaging, API gateways, etc., where configuration, management, and billing of servers are invisible to the end user[10].

FaaS, on the other hand, while perhaps the most central technology in serverless architectures, is focused on the event-driven computing paradigm where application code, or containers, only run in response to events or requests[11].

Examples: IBM Cloud Functions, AWS Lambda, Google Cloud Functions, Microsoft Azure Functions, Cloudflare Workers

Let’s look more closely at Serverless by considering FaaS as a subset of it.

Serverless

Serverless does not mean there is no server. It’s just somebody else’s server. The point is that you don’t have to provision and maintain that server; the cloud provider takes care of it for you[12].

Serverless computing is a cloud computing model that offloads all the backend infrastructure management tasks (provisioning, scaling, scheduling, patching) to the cloud provider. With serverless, your developers are free to focus all their time and effort on the code and business logic specific to their applications.

Serverless runs your application code on a per-request basis only and scales the supporting infrastructure up and down automatically in response to the number of requests.

“Serverless computing changes the economics of systems by moving the calculation from ‘cost per byte’ to ‘cost per CPU cycle.’ This means, or should mean, that we need to consider very different kinds of optimization”[13]. As mentioned in the FaaS section, with serverless, customers pay only for the resources being used while the application is running.

Suppose you have developed a social media application that allows users to upload images and apply filters. You want to deploy this application as soon as possible, so you need a fast and efficient way to enter the market and reach your customers.

In a serverless model, you could use services like AWS Lambda, Azure Functions, or Google Cloud Functions to handle image processing. These are the FaaS platforms mentioned earlier.

When a user uploads an image, a serverless function can be triggered automatically, resize the image, apply filters, and store it in a database or object storage. You don't have to manage servers to handle this workload, and the system scales automatically with the number of incoming image uploads. Whether there is one request or a million makes no difference to you, apart from the bill. The cloud provider manages all these scaling issues for you. This approach allows you to focus on the image processing logic rather than on infrastructure concerns.
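Here is a rough sketch of the "image processing logic" such a function would contain. To stay self-contained, it only plans the work: the event fields (`bucket`, `key`, `width`, `height`, `filter`), the `processed/` prefix, and the 1024-pixel limit are hypothetical, and a real function would read the image from storage and use an image library to do the actual resizing.

```python
def fit_within(width, height, max_side=1024):
    """Scale (width, height) down to fit max_side, keeping the aspect ratio."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # already small enough, leave unchanged
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


def on_image_uploaded(event):
    """Plan the processing for one uploaded image.

    `event` is a hypothetical upload notification, for example:
    {"bucket": "uploads", "key": "cat.jpg", "width": 4000, "height": 3000}
    """
    new_width, new_height = fit_within(event["width"], event["height"])
    return {
        "source": f'{event["bucket"]}/{event["key"]}',
        "target": f'{event["bucket"]}/processed/{event["key"]}',
        "size": (new_width, new_height),
        "filter": event.get("filter", "none"),
    }
```

The function handles exactly one upload. If a thousand users upload at the same moment, the idea is that the provider runs a thousand copies of it, and your code does not change.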

In a nutshell: your start-up can be born in the cloud!

However, serverless does not consist only of compute services. For example, there are serverless databases that scale automatically and charge you for your actual usage of resources.

In brief, to decide what we can call serverless, it is important to know its main characteristics:

There is no need for infrastructure management and ops

Pay for the actual usage of resources

Automatic scaling according to the number of requests

However, of course, nothing is a silver bullet. You need to understand your needs and make trade-offs. After this long discussion, let’s summarize the pros and cons of serverless to help you make that trade-off.

Benefits of Serverless

No Server Management: You don't have to worry about server provisioning, scaling, or maintenance tasks, as the cloud provider handles these responsibilities for you.

Event-Driven: As mentioned in the FaaS section, when you choose to use serverless computing, the functions are executed in response to specific events or triggers. Event-driven cloud-native applications can be implemented by using microservices, as well as by using serverless computing platforms such as Amazon Lambda and Azure Functions. This is because these platforms are natively event triggered. The use of serverless is especially useful when the frequency of event occurrence is low and when we can significantly save on infrastructure costs[14].

No Constantly Running Server: You pay only for the actual usage of your functions or services, rather than for idle server time. This can be beneficial when your application has a varying or unpredictable workload.

Automatic Scaling: The cloud provider scales your application automatically in response to workload fluctuations, so resources are allocated efficiently. It can handle sudden spikes in traffic without manual intervention, ensuring high availability and performance.

Fast Development and Deployment: Serverless platforms often provide simple deployment mechanisms, allowing you to quickly iterate and release new features. You can focus on writing small, modular functions that perform specific tasks and combine them to build complex applications.

Up to this point, serverless can be summed up as ‘great power, less responsibility,’ and that is true as far as it goes. However, of course, to gain this power, you need to give some things up.

Drawbacks of Serverless

Cold Start Latency: Serverless platforms spin up instances of your functions on demand, which can introduce latency when handling the first request after a period of inactivity. During that period, no instance of the function exists. When a client then sends an HTTP request, the cloud provider first has to create one, which takes noticeable time and slows down that request. This delay, known as a cold start, can impact real-time or latency-sensitive applications.

Lock-In: Serverless platforms are provided by cloud vendors, and each vendor has its own implementation and set of features. If you build your application using a specific serverless platform's proprietary features or integrations, it can become challenging to migrate to a different provider in the future. This vendor lock-in can limit your flexibility and increase switching costs. If that is a serious concern for you, serverless may not be the right choice.

Not Suitable for Long-Running Tasks: Serverless platforms are primarily designed for executing short-lived functions rather than long-running processes. If your application requires continuous, long-running tasks or background processes, serverless might not be the best fit. Serverless functions typically have execution time limits imposed by the cloud provider. If your function requires longer processing time, you might need to re-architect your application. Long-running tasks can therefore be more cost-effective and manageable on traditional servers or virtual machines.
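One possible way to re-architect, shown here only as a sketch, is to do as much work as fits into a time budget and hand the rest to a follow-up invocation. The function name, its parameters, and the doubling `work` function are all hypothetical; the actual limits and how you would trigger the next invocation depend on your provider.

```python
import time


def process_batch(items, time_limit_s, work=lambda x: x * 2, clock=time.monotonic):
    """Process items until the time budget is used up.

    Returns (results, remaining_items); the remaining items would be
    passed to a later invocation.
    """
    start = clock()
    results = []
    for index, item in enumerate(items):
        if clock() - start >= time_limit_s:
            return results, items[index:]  # out of time, stop cleanly
        results.append(work(item))
    return results, []
```

The `clock` parameter exists so the behavior can be checked with a fake clock instead of waiting in real time.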

Limited Control Over Infrastructure: Serverless abstracts away the underlying infrastructure, which can be an advantage. However, it also means you have less control over the infrastructure stack. If your application requires fine-grained control over networking, operating system configurations, or specific hardware requirements, serverless might not provide the level of control you need.

When you weigh the pros and cons of serverless, you must consider your specific application requirements before adopting a serverless architecture. Depending on the use case, traditional infrastructure might be more suitable than serverless.

Use Cases of Serverless Architecture

APIs for Web and Mobile Applications

Serverless can be an excellent choice for building web and mobile applications with dynamic workloads. Websites we interact with every day likely use functions to manage event-driven processes. Sites that load dynamic content frequently use functions to call an API and then populate the appropriate information. Often, websites that require user input, like an address for shipping, use functions to make an API call to perform the backend verification needed to validate that the information—in this example, the address—input by the customer is correct[15]. It allows you to handle user requests, process data, and serve content without managing servers. You can build serverless APIs, handle authentication and authorization, process user input, and integrate with databases and other services.

Multimedia and Data Processing

Serverless architecture can be leveraged for image and video processing tasks. For instance, you can build an application that automatically resizes or compresses images upon upload, applies filters, or extracts metadata. Services like AWS Lambda, Azure Functions, or Google Cloud Functions can process the media files and store them in appropriate formats or locations.

Serverless architecture is well-suited for real-time data processing scenarios. For example, you can build an application that ingests streaming data from sources like social media feeds. AWS Lambda, Azure Functions, or Google Cloud Functions can process and analyze the data in real-time, triggering actions or updating databases accordingly.

Serverless can also allow for easy intake and processing of large amounts of data, meaning that robust data pipelines can be built with little to no maintenance of infrastructure. Developers can use functions to store the information in a database or connect with an API to store the data in an outside database. Because developers only have to write a single function and are only charged when the events are triggered, they can save significant time and cost while developing applications.

The Bottom Line

Serverless and Function-as-a-Service (FaaS) are closely related concepts, with FaaS being a specific implementation of serverless computing. FaaS is a computing model within the broader serverless paradigm that focuses on executing individual functions or units of code in response to specific events or triggers.

Serverless computing encompasses a broader range of services and capabilities beyond just executing functions. It includes managed services for databases, storage, messaging, and other components, which can be used in conjunction with FaaS to build complete applications.

Besides serverless computing, there are also serverless databases that eliminate the need to provision and manage database servers and allow your application to handle spikes in traffic and workload seamlessly. Therefore, serverless does not always refer to computing.

FaaS platforms, such as AWS Lambda, Azure Functions, or Google Cloud Functions, provide the infrastructure and runtime environment to execute functions in response to events, such as an HTTP request, database update, or a timer-based trigger. These platforms handle the scaling, resource allocation, and management of the underlying infrastructure, allowing developers to focus solely on writing the function code.

The key idea behind FaaS is that developers can write discrete pieces of code (functions) that perform specific tasks or respond to specific events. Each function is created and then executed in isolation, and the platform dynamically scales the resources to accommodate the incoming workload. If there is no task or event, the function disappears.

Therefore, FaaS is a key component of the serverless paradigm, which encompasses a broader range of services and capabilities beyond just function execution. Together, FaaS and other serverless services enable developers to build scalable, event-driven applications with reduced operational overhead.

Serverless enables developers to focus solely on writing code and building applications by abstracting away the underlying infrastructure. It offers numerous benefits, such as increased scalability, reduced operational overhead, improved developer productivity, and cost optimization through pay-per-use pricing.

Therefore, if you are a developer

who does not want to worry about provisioning or managing servers and just wants to focus on writing code,

who does not want to handle infrastructure management tasks such as server provisioning, scaling, and monitoring,

who has a small-scale application and wants to deploy it immediately,

who does not want to worry about scaling the application based on the incoming workload,

serverless can help you meet your requirements.

However, serverless is not without challenges, such as cold start latency, lock-in, unsuitability for long-running tasks, and limited control over the infrastructure.

Despite these challenges, serverless computing has gained significant traction and continues to evolve rapidly. Its ability to abstract away infrastructure concerns, improve scalability, enhance developer productivity, and optimize costs makes it an appealing choice for a wide range of use cases, from small-scale applications to enterprise-grade systems. As technology advances and the serverless ecosystem matures, we can expect to see further advancements, improved tooling, and broader adoption of this paradigm.

Learn everything you need to know about MACH architecture

Frequently Asked Questions About Serverless Architecture

What Is Serverless Architecture?

Serverless architecture is a cloud computing execution model where a cloud provider dynamically manages and allocates resources for running applications. In this model, developers don't need to manage or maintain servers, allowing them to focus on writing code and building features.

What Is a Serverless Framework?

A serverless framework is a cloud computing architecture that enables developers to build and deploy applications without having to manage the underlying infrastructure. This approach allows for scalable, cost-effective solutions by automating the provisioning and management of resources. Developers can focus on writing code for their application's core functionality while the framework takes care of tasks like scaling, patching, and capacity management. By leveraging a serverless framework, businesses can improve operational efficiency, reduce costs, and quickly adapt to changing market demands.

What Is a Serverless Platform?

A serverless platform is a cloud-based environment that allows developers to build, deploy, and run applications without the need to manage or provision servers. It abstracts away the underlying infrastructure, enabling developers to focus on writing code for their application's core functionality while the platform takes care of tasks like scaling, patching, and capacity management. Serverless platforms typically offer automatic scaling to handle varying workloads, event-driven execution, and a pay-per-use pricing model, making them a cost-effective and efficient solution for developing modern applications that respond quickly to changing market demands.

What Is a Serverless Application?

A serverless application is a software solution that leverages serverless computing architecture, allowing developers to create and deploy applications without managing or provisioning servers. These applications rely on cloud-based services, such as AWS Lambda or Google Cloud Functions, to execute code in response to events like API requests or file uploads. By abstracting the underlying infrastructure, serverless applications enable developers to focus on building core functionality while the serverless platform handles tasks like scaling, patching, and capacity management. As a result, serverless applications offer cost savings, improved scalability, and faster development cycles for businesses seeking to efficiently build and maintain modern, event-driven applications.

What Is an Example of a Serverless Application?

An example of a serverless application is a real-time image processing system that uses AWS Lambda to automatically apply filters or transformations to uploaded images. When a user uploads an image to an Amazon S3 bucket, it triggers a Lambda function that processes the image according to predefined rules, such as resizing, cropping, or adding watermarks. The processed image is then saved back to the S3 bucket or delivered to the end user. This serverless application eliminates the need for managing servers or infrastructure, allowing developers to focus on building the image processing logic while AWS takes care of scaling, patching, and capacity management. As a result, businesses can achieve cost savings, improved scalability, and faster development cycles for their image processing needs.

What Is an Example of a Serverless Framework?

AWS Lambda is a popular example of a serverless framework that allows developers to build and deploy applications without managing servers. With Lambda, developers can write code in various languages, such as Python, Node.js, or Java, and execute it in response to events like API requests or file uploads. AWS automatically handles the scaling, patching, and capacity management of the underlying infrastructure, allowing developers to focus on creating application logic. By using AWS Lambda, businesses can achieve greater operational efficiency, reduced costs, and faster development cycles, making it an ideal choice for building modern, event-driven applications.

Why Use Serverless Framework?

Using a serverless framework offers multiple benefits, including cost savings, scalability, and faster development cycles. By eliminating the need to manage servers and infrastructure, developers can focus on writing application logic, leading to reduced operational overhead and maintenance costs. Serverless frameworks automatically scale with incoming traffic, ensuring optimal performance without manual intervention. This ability to handle fluctuating workloads makes them an attractive option for businesses aiming for agility and responsiveness. Additionally, serverless frameworks often employ a pay-per-use pricing model, helping organizations save money by only paying for the compute resources they actually consume. Overall, adopting a serverless framework streamlines development processes, reduces costs, and improves application performance.

How Does Serverless Differ from Traditional Server-Based Architectures?

In traditional server-based architectures, developers are responsible for managing and maintaining servers, whereas in serverless, the cloud provider handles these tasks. This allows developers to concentrate on application development without worrying about server management, scaling, or capacity planning.

What Is the Difference Between Server and Serverless?

The primary difference between server and serverless architectures lies in how they manage infrastructure and resources. In a traditional server-based architecture, developers are responsible for provisioning, maintaining, and scaling servers to run their applications, which can lead to increased operational overhead and costs. On the other hand, serverless architecture abstracts away the underlying infrastructure, allowing developers to focus on writing application code while the serverless platform handles tasks like scaling, patching, and capacity management. This shift in responsibility enables greater operational efficiency, cost savings, and faster development cycles in serverless environments, making them an attractive option for businesses seeking to build modern, event-driven applications.

What Are the Benefits of Using Serverless Architecture?

Some benefits of using serverless architecture include cost efficiency, scalability, faster development, and reduced maintenance.

Cost efficiency: You only pay for the actual usage of resources when your code is executed.

Scalability: The cloud provider automatically scales resources based on demand.

Faster development: Developers can focus on writing code instead of managing servers.

Reduced maintenance: No need to worry about server updates, patches, or infrastructure-level security.

Are There Any Drawbacks to Using Serverless Architecture?

Some drawbacks of serverless architecture include limited customization, cold starts, vendor lock-in, and debugging and monitoring challenges.

Limited customization: Cloud providers may impose restrictions on runtime environments and available resources.

Cold starts: Functions may take longer to execute initially if they have not been recently used.

Vendor lock-in: Switching between cloud providers can be challenging due to differences in their serverless offerings.

Debugging and monitoring challenges: Traditional tools may not work well with serverless architectures.

What Are Some Common Use Cases for Serverless Architecture?

Common use cases for serverless architecture include web applications, APIs, data processing, IoT applications, and chatbots.

Web applications: Build and deploy scalable web applications without managing servers.

APIs: Create and manage RESTful APIs using serverless functions.

Data processing: Process large volumes of data in real-time with event-driven functions.

IoT applications: Handle data from IoT devices and manage device communication.

Chatbots: Build intelligent chatbots using serverless functions and AI services.

How Do I Get Started with Serverless Architecture?

To get started with serverless architecture, choose a cloud provider and learn its serverless offerings (e.g., AWS Lambda, Google Cloud Functions, or Azure Functions). Next, familiarize yourself with the chosen provider's documentation, tutorials, and best practices for building and deploying serverless applications.

What Are the Best Practices for Optimizing Serverless Applications?

Some best practices for optimizing serverless applications include minimizing function package size, using caching, monitoring and optimizing resources, implementing error handling, and keeping functions focused.

Minimize function package size: Smaller packages lead to faster deployments and reduced cold start times.

Use caching: Cache frequently accessed data to reduce latency and improve performance.

Monitor and optimize resources: Regularly assess resource usage and adjust accordingly to minimize costs.

Implement error handling: Ensure your application can handle errors gracefully and recover from failures.

Keep functions focused: Design functions to perform a single task, making them easier to maintain and update.
